But now smart assistants (e.g., Google Assistant, Amazon’s Alexa and Apple’s Siri), which use voice recognition and artificial intelligence, raise new concerns.

Physicians and providers need to determine whether the smart assistant meets the Security Rule’s technical, administrative and physical safeguards. They need to confirm that recorded audio is not being shared with third parties. They must also ensure that the privacy statements meet the requirements of state, federal and international laws.

In April, Amazon announced the rollout of six new HIPAA-compliant skills from Express Scripts, Cigna, Livongo, Atrium Health, Providence St. Joseph Health and Boston Children’s Hospital. However, reactions are mixed on whether smart assistants are HIPAA compliant. Physicians and other providers should be cautious about the claim, reported by the HIPAA Journal, that Alexa “can now be used by a select group of healthcare organizations to communicate PHI without violating the HIPAA Privacy Rule.”

Recently, Google learned that a state’s law may also create liability, and that data transfers differ from sharing or selling audio to a third party. In July, a class action was filed against Google for violations of the Illinois Biometric Information Privacy Act (IBIPA). In essence, the plaintiffs allege that Google violated the IBIPA by sharing audio recorded from their Google Assistant-enabled devices with third parties.

The July 15, 2019, pleading alleges that “[u]nfortunately, Google disregards these statutorily imposed obligations and fails to inform persons that a biometric identifier or biometric information is being collected or stored and fails to secure written releases executed by the subject or the subject’s legally authorized representative.” This statement alone raises a myriad of issues for providers to consider as part of their due diligence.

Here are some questions to ask yourself before using any smart assistant:

· What do the privacy statements say, and what is being agreed to?

· Has a risk analysis been done, and are the Privacy Rule and Security Rule requirements being met?

· Can the items that are recorded be subpoenaed in a legal proceeding? (The answer is yes.)

· Has the patient consented to being recorded?

As healthcare technology and the legal landscape become increasingly complex, providers should take a deep breath and consider the basics. Conducting an annual risk analysis, along with adequate due diligence on products and business associates/subcontractors, can mitigate risk and enable a provider to make an informed decision while complying with a variety of laws.

Artificial intelligence has great potential in healthcare. When in doubt about its legality, explain the device to patients, including how it is being used, and get their consent.

20% Off Medical Practice Supplies

VIEW ALL

Manual Prescription Pad (Large - Yellow)

Manual Prescription Pad (Large - Yellow) Manual Prescription Pad (Large - Pink)

Manual Prescription Pad (Large - Pink) Manual Prescription Pads (Bright Orange)

Manual Prescription Pads (Bright Orange) Manual Prescription Pads (Light Pink)

Manual Prescription Pads (Light Pink) Manual Prescription Pads (Light Yellow)

Manual Prescription Pads (Light Yellow) Manual Prescription Pad (Large - Blue)

Manual Prescription Pad (Large - Blue)

__________________________________________________

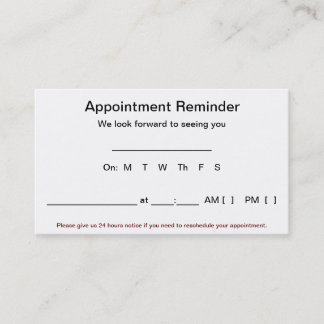

Appointment Reminder Cards

$44.05

15% Off

$56.30

15% Off

$44.05

15% Off

$44.05

15% Off

$56.30

15% Off

Build a connected real-time ecosystem within your healthcare application by integrating customizable chat APIs & SDKs to enable patients to reach out to you at any time.

ReplyDeletehealthcare messaging app